How to test your bot?

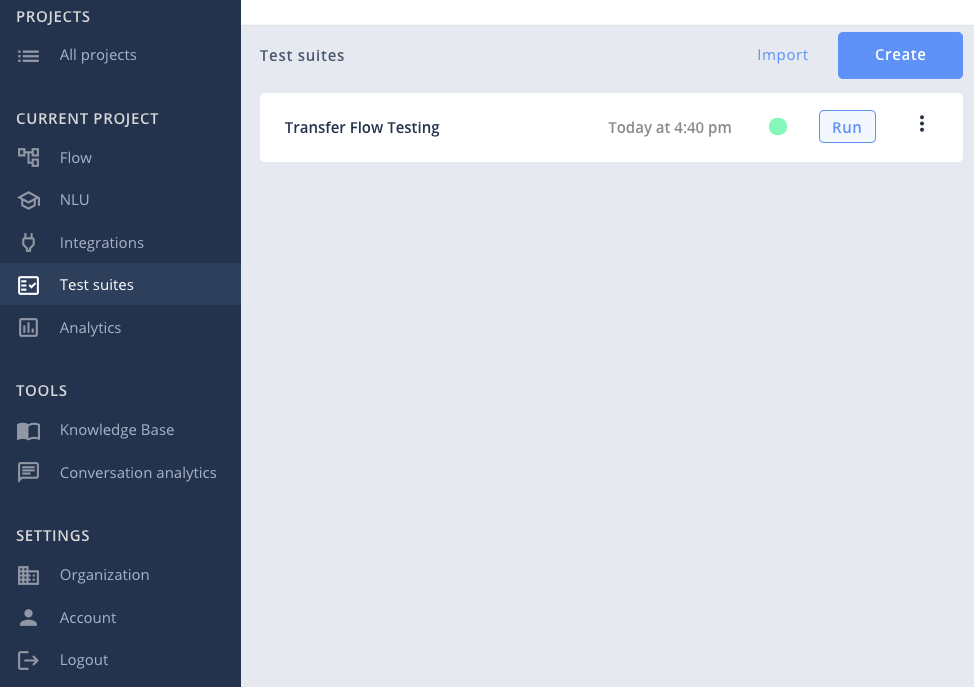

Automate has a built-in bot testing feature called Test Suites. It lets us write out conversation flows or scenarios and run them through the bot in seconds.

Quite simply, an automated test is a mock conversation. It saves us the trouble of testing the bot message by message and waiting for the response.

How does it work?

The Test Suite dashboard can hold an unlimited number of saved test cases. Each test case contains one conversation scenario between a user and the bot.

For example, one test case could be called "Small Talk" and test every small talk intent and its response in a single run.

The dashboard provides the following options:

- Create: Creates a new test suite (a group of test cases)

- Import: Uploads a test case written in a TXT file

- Test suite: Contains related test cases

- Run: Runs all the test cases in a test suite

- Edit: Renames the test suite, changes its test cases, exports it, or removes it

Adding tests

There are two methods to add test cases on the platform:

- Press Create and write the test within the interface

- Import a TXT file
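If you prefer importing, the TXT file can also be generated by a script. The following is a hypothetical Python sketch: the small talk phrases, the greeting, the `build_test_case` helper, and the file name `small_talk.txt` are placeholders rather than content from a real bot. It writes one turn per line, prefixing user turns with `>` and bot turns with `<`.

```python
from pathlib import Path

# Hypothetical small talk pairs: (user message, expected bot response).
# Replace them with your bot's real intents and responses.
SMALL_TALK = [
    ("how are you?", "I'm great, thanks for asking!"),
    ("what can you do?", "I can help you with transfers and account questions."),
]


def build_test_case(greeting: str, bot_greeting: str,
                    pairs: list[tuple[str, str]]) -> str:
    """Return test case text: '>' lines are user turns, '<' lines are bot turns."""
    lines = [f"> {greeting}", f"< {bot_greeting}"]
    for user_text, bot_text in pairs:
        lines.append(f"> {user_text}")
        lines.append(f"< {bot_text}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    text = build_test_case("hi", "Hello! How can I help you?", SMALL_TALK)
    # Write the file that would then be uploaded with Import.
    Path("small_talk.txt").write_text(text, encoding="utf-8")
    print(text)
```

Keeping the phrases in a list makes it easy to regenerate the file whenever the bot's responses change.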

Using Preview

While writing a test case, you can use the "Test result preview" to check that your test script is valid.

Running a test

After running a test suite, you can see a table summarising the execution result of each test case it contains.
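Conceptually, a run replays each user message against the bot, compares the replies with the expected ones, and reports one result per test case. The Python sketch below illustrates that idea only; it is not Automate's implementation. The `Bot` callable, `run_test_case`, `run_suite`, and the canned `demo_bot` are hypothetical, and the demo bot is stateless, unlike a real conversation.

```python
from typing import Callable

# Hypothetical bot interface: takes one user message, returns the bot's replies.
Bot = Callable[[str], list[str]]
# A test case here is a list of (user message, expected bot replies) steps.
Steps = list[tuple[str, list[str]]]


def run_test_case(bot: Bot, steps: Steps) -> tuple[str, str]:
    """Replay each user message and compare the replies; stop at the first mismatch."""
    for number, (user_message, expected) in enumerate(steps, start=1):
        actual = bot(user_message)
        if actual != expected:
            return "failed", f"Wrong response at step {number}: {actual!r}"
    return "passed", ""


def run_suite(bot: Bot, suite: dict[str, Steps]) -> list[tuple[str, str, str]]:
    """Run every test case in the suite and return one (name, result, detail) row each."""
    return [(name, *run_test_case(bot, steps)) for name, steps in suite.items()]


def demo_bot(message: str) -> list[str]:
    """Hypothetical canned bot used only to exercise the runner."""
    canned = {
        "hi": ["Hello! How can I help you?"],
        "transfer money": ["Give your full name, please."],
    }
    return canned.get(message, ["Sorry, I didn't understand."])


if __name__ == "__main__":
    suite = {
        "Transfer": [("hi", ["Hello! How can I help you?"]),
                     ("transfer money", ["Give your full name, please."])],
        "Small Talk": [("hi", ["Hello! How can I help you?"]),
                       ("how are you?", ["I'm fine, thanks!"])],
    }
    # Print one summary row per test case, similar in spirit to the results table.
    for row in run_suite(demo_bot, suite):
        print(row)
```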

You can also export the results to XLS format from the results view.

Writing Test Cases

Max number of lines: The maximum number of lines in a test case is 2,000. Above this value, tests may become unstable and generate errors.

Test cases are written in plain text, either directly in the interface or in a text editor. The characters > and < simulate the conversation: lines starting with > are the user's messages and lines starting with < are the bot's responses.

- Each turn of the conversation is written on its own line.

- The first turn is always the user's, usually a greeting. Writing "hi" is enough.

- Using `*< *` signifies a wildcard.

- Using `?<` treats the line as a regular expression, e.g. `?< The version of prod-\d{3}\.\d works on production.`

- All HTML and SSML markup is ignored, so it does not need to be included in test cases.
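Before importing a TXT file, you may want to check these rules locally. The following is a minimal Python sketch, not the platform's own parser: `parse_test_case` and `Turn` are hypothetical names, blank lines are assumed to be ignored, and treating `*<` as a line prefix is an assumption based on the wildcard rule. It enforces the 2,000-line limit, the line markers, and the requirement that the first turn comes from the user.

```python
from dataclasses import dataclass

MAX_LINES = 2000  # limit stated for test cases

# Line markers mapped to turn kinds. '*<' as a prefix is an assumption.
MARKERS = {"?<": "regex", "*<": "wildcard", ">": "user", "<": "bot"}


@dataclass
class Turn:
    kind: str  # "user", "bot", "regex" or "wildcard"
    text: str


def parse_test_case(script: str) -> list[Turn]:
    """Split a test case into turns, raising ValueError on obvious mistakes."""
    lines = script.splitlines()
    if len(lines) > MAX_LINES:
        raise ValueError(f"{len(lines)} lines exceeds the {MAX_LINES}-line limit")
    turns = []
    for number, raw in enumerate(lines, start=1):
        line = raw.strip()
        if not line:
            continue  # assumption: blank lines carry no meaning
        for marker, kind in MARKERS.items():
            if line.startswith(marker):
                turns.append(Turn(kind, line[len(marker):].strip()))
                break
        else:
            raise ValueError(f"line {number} has no > or < marker: {raw!r}")
    if not turns or turns[0].kind != "user":
        raise ValueError("the first turn must be a user message (a line starting with '>')")
    return turns
```

For example, `parse_test_case("> hi\n< Hello! How can I help you?")` returns a user turn followed by a bot turn, while a script that opens with a `<` line is rejected.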

Example test case:

> Start

< Hi there, please choose a language.

< Hallo! Bitte wählen Sie eine Sprache.

< Bonjour, veuillez choisir une langue.

< Hola! Seleccione un idioma por favor.

> English 🇬🇧

< Hello! 👋 I'm Bianca van BancoBot. How can I help you?

> transfer money

< Give your full name, please.

> Andrew Fryckowski

< Thank you, Andrew.

< How much money would you like to transfer?

> 20 dollars

< Who would you like to transfer it to?

> Marcella de Rosa

< When should the transfer happen?

> Tomorrow

< Okay, so please confirm the transfer to Marcella de Rosa for amount of 20 $ on the day of 2021-06-18 00:00.

➡️ Full description of Test Cases Syntax

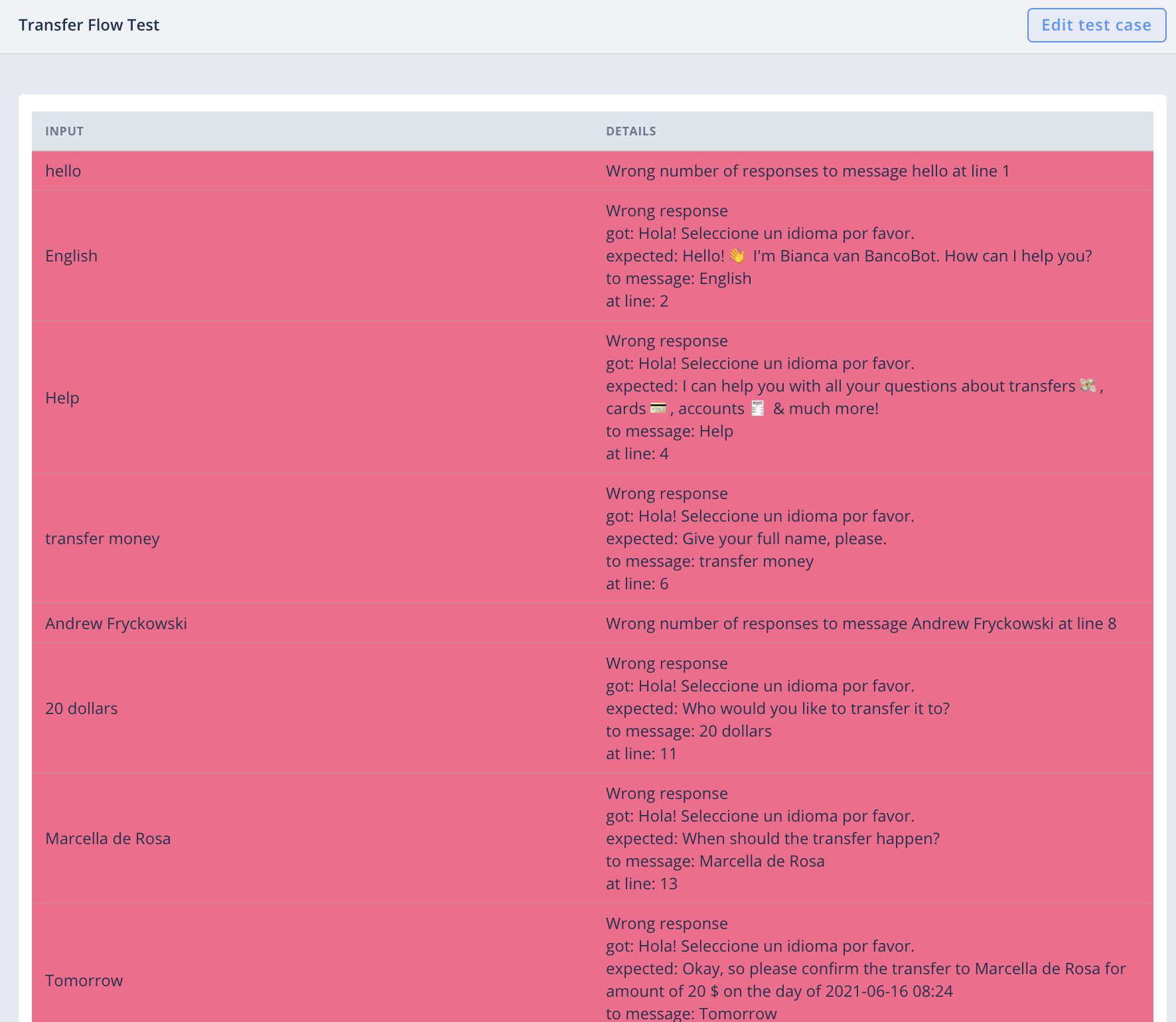

Test result

The results table shows the execution result of each test case. A Wrong response result means the bot's reply differs from the one defined in the test case, i.e. it does not match the expected bot response text exactly.
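To make the Wrong response rule concrete, here is a small Python sketch of how one expected bot line could be checked against the bot's actual reply. It is an illustration under assumptions, not Automate's matcher: markup is removed with a naive tag pattern, `?<` lines are assumed to require a full-string regular expression match, wildcard lines are not modeled, and `check_bot_turn` is a hypothetical name.

```python
import re

TAG = re.compile(r"<[^>]+>")  # naive HTML/SSML tag pattern (assumption)


def strip_markup(text: str) -> str:
    """Remove HTML/SSML tags, since test cases ignore markup."""
    return TAG.sub("", text).strip()


def check_bot_turn(expected_line: str, actual: str) -> str:
    """Compare one expected '<' or '?<' line with the bot's actual reply.

    Returns "OK" or "Wrong response". Using re.fullmatch for '?<' lines is
    an assumption about how the platform applies regular expressions.
    """
    reply = strip_markup(actual)
    if expected_line.startswith("?<"):
        pattern = expected_line[2:].strip()
        return "OK" if re.fullmatch(pattern, reply) else "Wrong response"
    if expected_line.startswith("<"):
        expected = expected_line[1:].strip()
        # Plain lines must match the bot's text exactly.
        return "OK" if reply == expected else "Wrong response"
    raise ValueError("expected a bot line starting with '<' or '?<'")
```

With this sketch, `< Thank you, Andrew.` passes against the reply `<p>Thank you, Andrew.</p>` but fails against `Thanks, Andrew.`, which is exactly the kind of mismatch reported as Wrong response.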

Screenshots: a failed test case and a passed test case.

⚙️ Test Suite Setup

In the test suite setup, you can add "test case code" that is executed before every test case in the suite. For example, you can mock data for every test case, or assert the bot's first response when it is the same in every test case.
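One way to picture the setup is as lines placed at the top of every test case in the suite. The Python sketch below models only that conversational part, not data mocking; `apply_setup` is a hypothetical helper, and treating the setup as a plain prefix is an assumption about how the platform combines the two.

```python
def apply_setup(setup: str, test_cases: dict[str, str]) -> dict[str, str]:
    """Prepend the suite-level setup lines to every test case (simplified model)."""
    # Drop blank lines so the combined script has one turn per line.
    setup_lines = [line for line in setup.splitlines() if line.strip()]
    combined = {}
    for name, body in test_cases.items():
        body_lines = [line for line in body.splitlines() if line.strip()]
        combined[name] = "\n".join(setup_lines + body_lines)
    return combined


if __name__ == "__main__":
    # The shared opening exchange lives in the setup instead of every test case.
    setup = "> Start\n< Hi there, please choose a language."
    cases = {"English": "> English 🇬🇧\n< Hello! 👋 I'm Bianca van BancoBot. How can I help you?"}
    print(apply_setup(setup, cases)["English"])
```

Moving the common first exchange into the setup keeps each test case focused on the flow it actually tests.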
