Streaming integrations

What is streaming?

HTTP streaming is a technique used to deliver real-time content over the Internet. Instead of returning a single response when the request completes, the API returns chunks of data as soon as they are available.

Why is it useful in Automate?

The main purpose of streaming integrations in Automate is to integrate with Large Language Models (LLMs) in a streaming manner. Instead of waiting for the model to generate the full response, we can start returning it to the user piece by piece.

How to set it up?

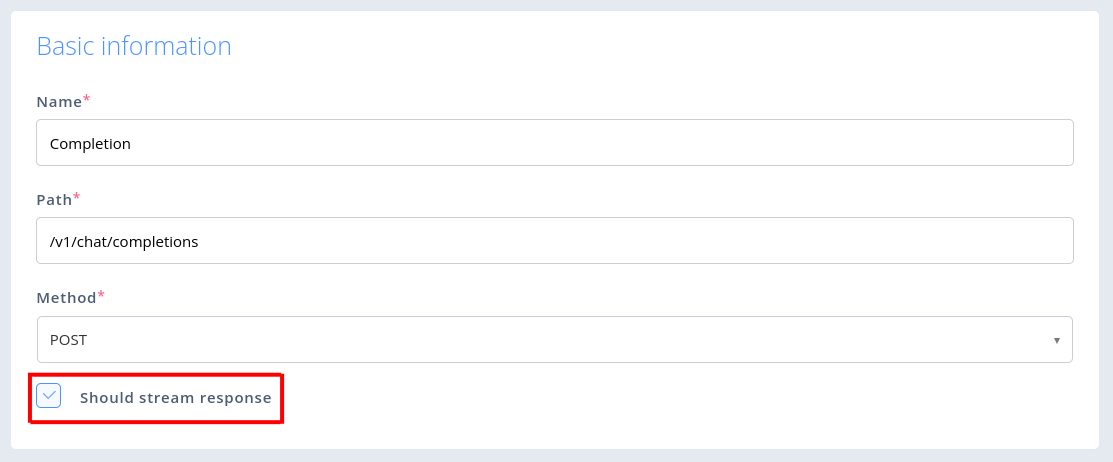

To mark a request as streaming, check the "Should stream response" checkbox in the basic information section of the request create/edit form.

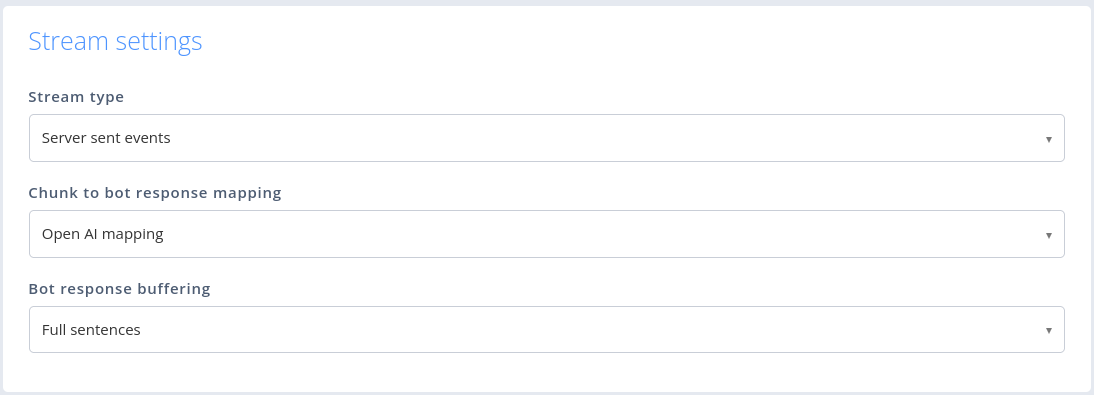

After you select this option, the "Stream settings" section appears.

Stream type

Available options are:

- Server sent events

- JSON Per Line

- Raw lines

Different APIs use different chunk formats. Check the documentation of the API you are integrating with to see which format it uses.
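The three stream types differ mainly in how one chunk is delimited from the next. As an illustration only (this is not Automate's implementation), a minimal Python sketch of a parser for each type could look like the following. It assumes the response body has already been split into text lines, and the function names are hypothetical.

```python
import json
from typing import Any, Iterable, Iterator, List


def parse_sse(lines: Iterable[str]) -> Iterator[str]:
    """Server sent events: yield the data payload of each event.

    An event ends at a blank line; several "data:" lines within one event
    are joined with newlines. Other fields (event:, id:, retry:) and comment
    lines starting with ":" are ignored in this sketch.
    """
    data: List[str] = []
    for line in lines:
        line = line.rstrip("\r\n")
        if line == "":
            if data:  # a blank line dispatches the pending event
                yield "\n".join(data)
                data = []
        elif line.startswith("data:"):
            value = line[len("data:"):]
            if value.startswith(" "):  # one leading space is not part of the value
                value = value[1:]
            data.append(value)
    # As in the SSE specification, an event not terminated by a blank line is discarded.


def parse_json_lines(lines: Iterable[str]) -> Iterator[Any]:
    """JSON Per Line: every non-empty line is one complete JSON document."""
    for line in lines:
        if line.strip():
            yield json.loads(line)


def parse_raw_lines(lines: Iterable[str]) -> Iterator[str]:
    """Raw lines: every non-empty line is one chunk of plain text (assumption)."""
    for line in lines:
        line = line.rstrip("\r\n")
        if line:
            yield line
```

The OpenAI API, for example, streams server sent events whose `data` payloads are JSON chunks and finishes with a `data: [DONE]` event.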

Chunk to bot response mapping

Available options are:

- Open AI mapping

- No streaming to response

- Return whole chunk

- Custom (if selected, "Chunk to bot response mapping expression" appears)

The bot response mapping defines which part of a chunk is treated as the bot response. For example, the OpenAI API returns chunks in the following format:

```json
{
  "model": "gpt-3.5-turbo-0613",
  "choices": [
    {
      "index": 0,
      "delta": {
        "content": " you"
      },
      "finish_reason": null
    }
  ],
  "object": "chat.completion.chunk",
  "id": "chatcmpl-8Cplcia63B7ZoD8YP43LdsOWvyHj0",
  "created": 1698069620
}
```

The actual bot response is in the `choices[0].delta.content` field, and that is the value to enter as the "Chunk to bot response mapping expression" if you select the "Custom" mapping.

Since OpenAI is the most popular LLM provider, there is a dedicated "Open AI mapping" option that uses exactly this expression under the hood.
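To make the mapping concrete, here is a minimal Python sketch that applies a mapping expression such as `choices[0].delta.content` to a single JSON chunk. The expression syntax it accepts (dot-separated keys with `[index]` suffixes), the mode names `"openai"`, `"custom"`, `"whole_chunk"` and `"none"`, and all function names are assumptions made for illustration; they are not Automate's documented API.

```python
import json
import re
from typing import Any, Optional

# Hypothetical expression syntax: dot-separated keys, each optionally
# followed by [index], e.g. "choices[0].delta.content".
_SEGMENT = re.compile(r"([^.\[\]]+)|\[(\d+)\]")

# The expression the "Open AI mapping" option is described as using.
OPENAI_EXPRESSION = "choices[0].delta.content"


def evaluate_path(data: Any, expression: str) -> Any:
    """Walk `data` along `expression`; return None if any step is missing."""
    value = data
    for key, index in _SEGMENT.findall(expression):
        if value is None:
            return None
        if key:
            value = value.get(key) if isinstance(value, dict) else None
        else:
            i = int(index)
            value = value[i] if isinstance(value, list) and i < len(value) else None
    return value


def map_chunk(chunk: str, mode: str, expression: Optional[str] = None) -> Optional[str]:
    """Turn one raw chunk into the text sent to the user (None = send nothing)."""
    if mode == "none":
        return None  # "No streaming to response"
    if mode == "whole_chunk":
        return chunk  # "Return whole chunk"
    if mode == "openai":
        expression = OPENAI_EXPRESSION
    elif mode != "custom" or not expression:
        raise ValueError(f"unsupported mode or missing expression: {mode!r}")
    value = evaluate_path(json.loads(chunk), expression)
    return value if isinstance(value, str) else None
```

The last chunk of an OpenAI stream typically carries an empty `delta`, so the sketch returns `None` for it instead of failing.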

Bot response buffering

Available options are:

- None

- Words

- Full sentences

Buffering lets you hold back mapped chunks and wait for the following ones until they form a full word or a full sentence, and only then send the text to the user.
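Conceptually, word and sentence buffering accumulate mapped text and release it only up to the last complete unit. The following Python sketch shows one way to do this; the boundary rules (any whitespace ends a word; `.`, `!` or `?` followed by whitespace ends a sentence) and the mode names are assumptions, not the rules Automate actually applies.

```python
import re
from typing import Iterable, Iterator

# Hypothetical boundary rules for each buffering mode.
_BOUNDARIES = {
    "words": re.compile(r"\s"),               # any whitespace ends a word
    "sentences": re.compile(r"[.!?](?=\s)"),  # ., ! or ? followed by whitespace
}


def buffer_text(pieces: Iterable[str], mode: str = "words") -> Iterator[str]:
    """Re-chunk mapped text so only complete words or sentences are released."""
    if mode == "none":
        yield from pieces  # no buffering: pass every piece through immediately
        return
    boundary = _BOUNDARIES[mode]
    pending = ""
    for piece in pieces:
        pending += piece
        # Position just after the last complete unit currently in the buffer.
        cut = 0
        for match in boundary.finditer(pending):
            cut = match.end()
        if cut:
            yield pending[:cut]
            pending = pending[cut:]
    if pending:
        yield pending  # flush the remainder when the stream ends
```

For the pieces `["Hel", "lo wor", "ld. How", " are", " you?"]`, sentence buffering releases `"Hello world."` and then `" How are you?"`. Any text still in the buffer when the stream ends is flushed, so no part of the response is lost.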

How to use a streaming integration in a Flow?

You can use it like any other integration, through the Integration block. If you selected a "Chunk to bot response mapping" other than "No streaming to response", you don't need an additional Say block to pass the response from the integration to the user. The Integration block waits for the request to finish, passing mapped chunks to the user in the meantime. When the request is done, all chunks are also available in the "chunks" output value.
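The behavior of the Integration block described above can be summarized as a loop. This Python sketch is hypothetical: `map_chunk` and `send_to_user` stand in for the configured mapping and for the channel that delivers partial responses, and it assumes the "chunks" output holds the raw, unmapped chunks.

```python
from typing import Callable, Dict, Iterable, List, Optional


def run_streaming_integration(
    raw_chunks: Iterable[str],
    map_chunk: Callable[[str], Optional[str]],
    send_to_user: Callable[[str], None],
) -> Dict[str, List[str]]:
    """Forward mapped chunks while the request runs; return all chunks at the end."""
    collected: List[str] = []
    for chunk in raw_chunks:  # waits for the next chunk until the stream ends
        collected.append(chunk)
        text = map_chunk(chunk)
        if text:  # skip chunks that map to nothing (None or "")
            send_to_user(text)
    # Only after the stream is exhausted does the block produce its output.
    return {"chunks": collected}
```

Because partial responses are sent from inside the loop, the user sees text while the request is still running, and the Flow continues only once the returned output is available.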

Limitations

To benefit from a streaming integration, the client has to support consuming partial bot responses as they arrive. Currently, only Webchat and Voice Gateway support this.
