Training analytics

Because the NLU engine can learn continuously, you can verify the current performance of the model at any time. The verification estimates how accurately the engine will identify intents for phrases it has not been trained with. The results are presented as statistical measures, which are described later in this document.

The effectiveness verification can be performed in two ways:

- Cross-validation

- Evaluate against Test Dataset

Cross-validation

This function estimates effectiveness with a statistical method that temporarily excludes part of the training set, treats the excluded phrases as untrained, and uses them to test the engine. The test is performed for each intent separately. Cross-validation conducts four independent tests.

Example: If the NLU engine has been trained with the phrases "yes", "clear", "of course" and "sure" for the intent "confirmation", then cross-validation will run 4 independent tests:

- What intent would the engine assign to the phrase "yes" if the intent "confirmation" during training was only assigned the phrases "clear", "of course" and "sure"?

- What intent would the engine assign to the phrase "clear" if the intent "confirmation" during training was only assigned the phrases "yes", "of course" and "sure"?

- What intent would the engine assign to the phrase "of course" if the intent "confirmation" during training was only assigned the phrases "yes", "clear" and "sure"?

- What intent would the engine assign to the phrase "sure" if the intent "confirmation" during training was only assigned the phrases "yes", "clear" and "of course"?

The combined results of these tests indicate the predicted effectiveness of intent recognition for phrases that were unknown to the engine during training.

In the above example, the division into 4 subsets was obvious, because the set contained only 4 phrases. For larger sets, the division into subsets is random each time, so the results of repeated cross-validation may differ slightly. A set with fewer than 4 phrases cannot be split into 4 subsets, so an intent with fewer than 4 assigned phrases will not be tested.

Because cross-validation has to conduct four independent tests, it may take a long time (up to 20 minutes). If you refresh the screen while cross-validation is in progress, the result will not be presented. While it is running, do not start another cross-validation from a different account or computer, and do not switch to another screen of the application.
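The splitting described above can be illustrated with a minimal sketch. It is not the engine's actual implementation: the function name `four_fold_splits`, the `seed` parameter, and the way phrases are distributed across subsets are assumptions made for illustration only.

```python
import random


def four_fold_splits(phrases, k=4, seed=None):
    """Yield (training_phrases, held_out_phrases) pairs for one intent.

    Hypothetical helper: intents with fewer than k phrases yield nothing,
    mirroring the rule that such intents are not tested.
    """
    if len(phrases) < k:
        return  # too few phrases to form k subsets
    shuffled = list(phrases)
    random.Random(seed).shuffle(shuffled)  # random division into subsets
    folds = [shuffled[i::k] for i in range(k)]  # k roughly equal subsets
    for i, held_out in enumerate(folds):
        # Train on every subset except the one being held out for testing.
        training = [p for j, fold in enumerate(folds) if j != i for p in fold]
        yield training, held_out


confirmation = ["yes", "clear", "of course", "sure"]
for training, held_out in four_fold_splits(confirmation, seed=0):
    print(f"train on {training}, test on {held_out}")
```

With exactly 4 phrases, each test holds out a single phrase and trains on the other three, as in the example above. With more phrases, a different `seed` gives a different division, which is why repeated runs can produce slightly different results.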

Evaluate against Test Dataset

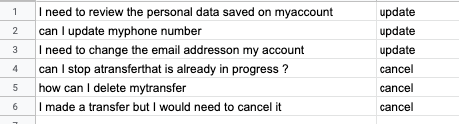

The second way to perform the NLU Training Analytics test is to upload a file containing a list of phrases (regardless of whether the engine has already been trained for these phrases or not) and the related intents.

Here is an example of a supported .csv file:

Use UTF-8 character encoding in the test .csv file, and use commas as data separators (when opening the file as text).
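A test file that meets these requirements could be generated as in the sketch below. The column layout (phrase first, intent second, no header row) and the helper name `build_test_csv` are assumptions; check them against the sample file supported by your installation.

```python
import csv
import io

# Assumed layout: one row per phrase, phrase first, intent second, no header.
rows = [
    ("cool", "confirmation"),
    ("ok", "confirmation"),
    ("yes, please", "confirmation"),  # a comma inside a phrase gets quoted
]


def build_test_csv(rows):
    """Return the rows as comma-separated, UTF-8 encoded bytes."""
    buffer = io.StringIO(newline="")
    csv.writer(buffer, delimiter=",").writerows(rows)
    return buffer.getvalue().encode("utf-8")


csv_bytes = build_test_csv(rows)
print(csv_bytes.decode("utf-8"))
```

Writing through the `csv` module rather than joining strings by hand keeps phrases that contain commas intact, because they are quoted automatically.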

This test lets you measure how effective the engine is, given the phrases and intents used for training, when it processes the phrases imported in the test file. The imported phrases serve as a predictor of actual statements in conversations with the bot.

Example: If the NLU engine was trained with the phrases "yes", "clear", "of course" and "sure" for the intent "confirmation", then in the test file you could add new phrases for this intent, e.g. "cool" and "ok". A test performed with such a file would indicate how effectively the intent "confirmation" is recognized for the phrases "cool" and "ok", with which the engine has never been trained.

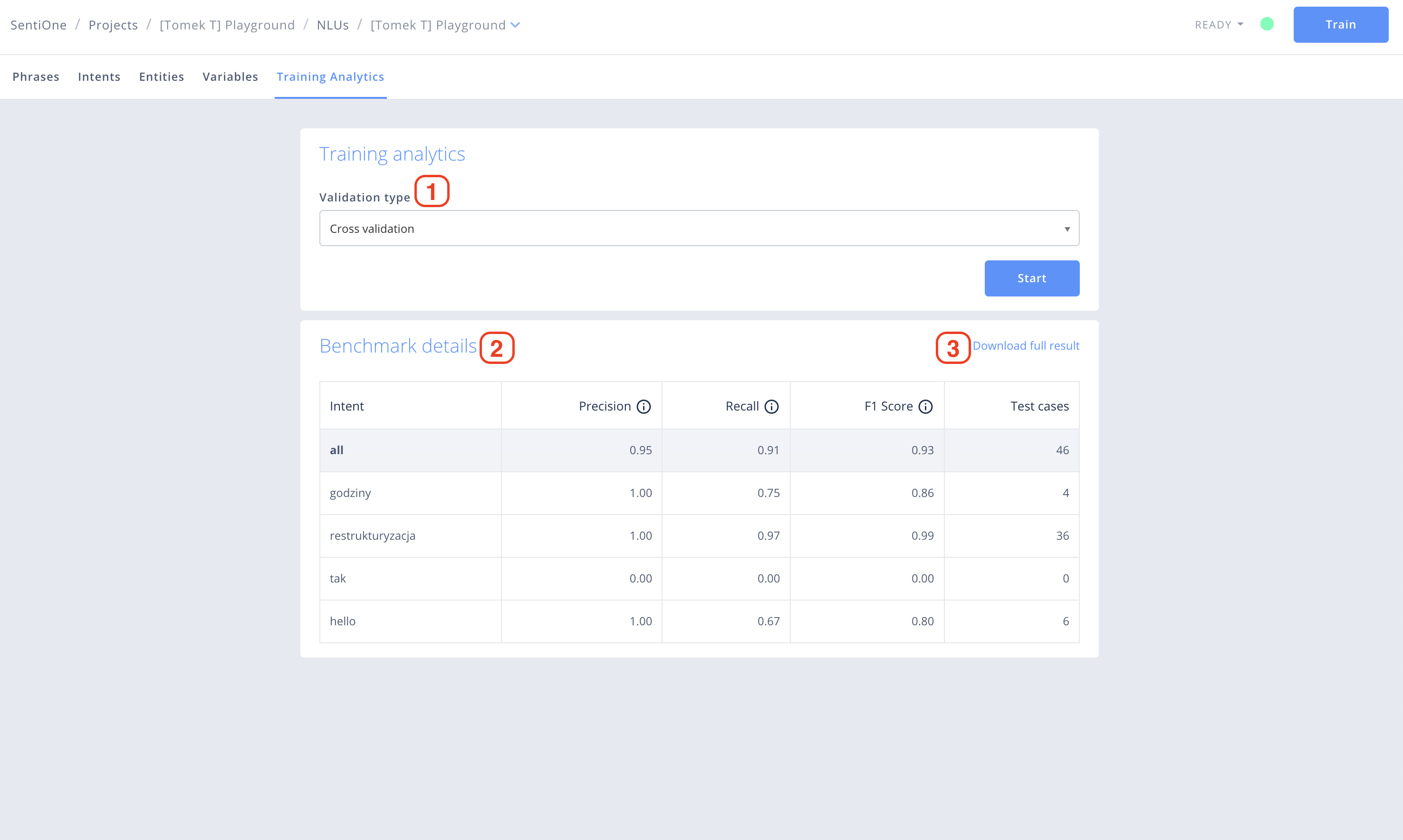

Verification results

After performing the test with any of the above methods, the results will be presented in the form of the Precision, Recall and F1 Score measures described below, calculated in relation to the entire test set of phrases (row "all"), as well as broken down into individual intents.

Additionally, you can select "Download full report". The report is an .xls file that contains a detailed list of expected and recognized intents, with their scores, for all tested phrases. Analyzing the file lets you identify incorrectly recognized phrases and use them to train the engine further.
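Once the report rows are loaded, finding the incorrectly recognized phrases amounts to a simple filter. The sketch below works on in-memory rows. The field names `phrase`, `expected`, `recognized` and `score`, as well as the sample values, are made up for illustration and may not match the actual columns of the .xls report.

```python
# Hypothetical report rows; the real .xls column names and values will differ.
report_rows = [
    {"phrase": "cool", "expected": "confirmation", "recognized": "confirmation", "score": 0.91},
    {"phrase": "ok", "expected": "confirmation", "recognized": "negation", "score": 0.48},
]


def misrecognized(rows):
    """Return the rows whose recognized intent differs from the expected one."""
    return [row for row in rows if row["expected"] != row["recognized"]]


for row in misrecognized(report_rows):
    print(f'{row["phrase"]!r}: expected {row["expected"]}, got {row["recognized"]}')
```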

Statistical measures

The statistical measures, displayed as a result of the NLU Training Analytics, are based on the indicators relating to the correct and incorrect identification of intents, as illustrated in the table below with an example of recognizing "Confirmation" and "Negation" intents:

| | Phrases expected to match the intent "Confirmation" | Phrases expected to match the intent "Negation" |
| --- | --- | --- |
| Phrases recognized with the intent "Confirmation" | Phrases correctly recognized as "Confirmation" (TP) | Phrases incorrectly recognized as "Confirmation" (FP) |
| Phrases recognized with the intent "Negation" | Phrases incorrectly recognized as "Negation" (FN) | Phrases correctly recognized as "Negation" (TN) |

- Precision – determines the ratio of the number of phrases correctly recognized as an intent (TP) to the number of all phrases recognized as that intent (TP + FP).

- Recall (understood as effectiveness) – determines the ratio of the number of phrases correctly recognized as an intent (TP) to the number of all phrases that should actually be recognized as that intent (TP + FN).

- F1 Score – combines Precision and Recall into a single value, calculated as their harmonic mean: 2 × Precision × Recall / (Precision + Recall).
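The three measures can be computed from a list of (expected intent, recognized intent) pairs, as in the following sketch. F1 is computed here as the harmonic mean of Precision and Recall, which is its standard definition. The function name `intent_metrics` and the convention of returning 0.0 when a denominator is zero are assumptions, not documented behavior of the product.

```python
def intent_metrics(pairs, intent):
    """Return (precision, recall, f1) for one intent.

    pairs: iterable of (expected_intent, recognized_intent) tuples.
    """
    pairs = list(pairs)
    tp = sum(1 for exp, rec in pairs if exp == intent and rec == intent)
    fp = sum(1 for exp, rec in pairs if exp != intent and rec == intent)
    fn = sum(1 for exp, rec in pairs if exp == intent and rec != intent)
    # Assumed convention: a measure with a zero denominator is reported as 0.0.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


results = (
    [("confirmation", "confirmation")] * 3  # TP for "confirmation"
    + [("confirmation", "negation")] * 2    # FN for "confirmation"
    + [("negation", "confirmation")] * 1    # FP for "confirmation"
    + [("negation", "negation")] * 2        # TN for "confirmation"
)
precision, recall, f1 = intent_metrics(results, "confirmation")
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

In this example TP = 3, FP = 1 and FN = 2, so Precision is 3/4 = 0.75, Recall is 3/5 = 0.60, and F1 is about 0.67.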
